
FUCHS Cluster Usage

FUCHS is a general-purpose compute cluster based on Intel CPU architectures running AlmaLinux 9 and SLURM. Please read the following instructions and ensure that this guide is fully understood before using the system.

Login

An SSH client is required to connect to the cluster. On a Linux system the command is usually:

ssh <user_account>@fuchs.hhlr-gu.de

Am I connected to the right server? Please find our ssh-rsa fingerprint here:

Your system may warn you that something is wrong with the security of the connection (“maybe somebody is eavesdropping”). Because the operating system was upgraded from Scientific Linux 7.9 to AlmaLinux 9.2, the SSH server keys have changed. Please erase your old FUCHS key with

ssh-keygen -R fuchs.hhlr-gu.de

and accept the new fuchs.hhlr-gu.de key. Above you will find our unique ECDSA and ED25519 fingerprints. Some programs tend to display the fingerprint in the SHA256 or MD5 format. Just click on fuchs.hhlr-gu.de fingerprints above.

Please use ssh-keyscan fuchs.hhlr-gu.de to check that the key offered by the server matches the one above.
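The check above can be sketched as a small helper that fetches the host keys and prints their fingerprints for comparison with the published ones. The function name show_fingerprints is hypothetical, and reading keys from standard input with -f - assumes a reasonably recent OpenSSH.

```shell
# Hypothetical helper: print the fingerprints of a server's ECDSA and
# ED25519 host keys so they can be compared with the published ones.
show_fingerprints() {
    fp_host="$1"
    fp_hash="${2:-sha256}"   # sha256 (default) or md5
    ssh-keyscan -t ecdsa,ed25519 "$fp_host" 2>/dev/null |
        ssh-keygen -l -E "$fp_hash" -f -
}
```

For example, show_fingerprints fuchs.hhlr-gu.de prints SHA256 fingerprints, while show_fingerprints fuchs.hhlr-gu.de md5 prints them in MD5 format.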

On Windows systems please use/install a Windows SSH client (e.g. PuTTY, MobaXterm, the Cygwin ssh package or the built-in ssh command).

After your first login you will get a message that your password has expired and that you have to change it. At the prompt, enter the password provided by CSC, then choose a new one and retype it. You will be logged out automatically. Now you can log in with your new password and work on the cluster.

You can display the CPU-time limit (in seconds) that applies to your processes by running

ulimit -t

on the command line. On the login node, any process that exceeds the CPU-time limit (e.g. a long running test program or a long running rsync) will be killed automatically.
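As a minimal sketch, the limit can also be printed in a more readable form. The output wording is illustrative only; the value comes straight from ulimit -t.

```shell
# Query the soft CPU-time limit of the current shell (in seconds).
limit=$(ulimit -t)

if [ "$limit" = "unlimited" ]; then
    echo "No CPU-time limit is set for this shell."
else
    echo "CPU-time limit: $limit s (about $((limit / 60)) min)"
fi
```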

Environment Modules

There are several versions of software packages installed on our systems. The same name for an executable (e.g. mpirun) and/or library file may be used by more than one package. The environment module system, with its module command, helps to keep them apart and prevents name clashes. You can list the module-managed software by running module avail on the command line. Other important commands are module load <name> (loads a module) and module list (lists the already loaded modules).

If you want to know more about module commands, the module help command will give you an overview.

Working with Intel oneAPI

The module avail command shows all modules available on the cluster. The intel/oneapi/xxx module works a little differently, because it acts like a container for further modules. First load it with module load intel/oneapi/xxx, then run module avail again to see what is inside. Now you can load your preferred module.

Example

    module load intel/oneapi/2023.2.0
    module avail
    ...
    # new modules available within the Intel oneAPI modulefile
    ----------------------------------- /cluster/intel/oneapi/2023.2.0/modulefiles ---------------------
    advisor/2023.2.0        dev-utilities/2021.10.0  icc32/2023.2.1                mkl32/2023.2.0
    advisor/latest          dev-utilities/latest     icc32/latest                  mkl32/latest
    ccl/2021.10.0           dnnl-cpu-gomp/2023.2.0   inspector/2023.2.0            mpi/2021.10.0
    ccl/latest              dnnl-cpu-gomp/latest     inspector/latest              mpi/latest
    compiler-rt/2023.2.1    dnnl-cpu-iomp/2023.2.0   intel_ipp_ia32/2021.9.0       mpi_BROKEN/2021.10.0
    compiler-rt/latest      dnnl-cpu-iomp/latest     intel_ipp_ia32/latest         mpi_BROKEN/latest
    compiler-rt32/2023.2.1  dnnl-cpu-tbb/2023.2.0    intel_ipp_intel64/2021.9.0    oclfpga/2023.2.0
    compiler-rt32/latest    dnnl-cpu-tbb/latest      intel_ipp_intel64/latest      oclfpga/2023.2.1
    compiler/2023.2.1       dnnl/2023.2.0            intel_ippcp_ia32/2021.8.0     oclfpga/latest
    compiler/latest         dnnl/latest              intel_ippcp_ia32/latest       tbb/2021.10.0
    compiler32/2023.2.1     dpct/2023.2.0            intel_ippcp_intel64/2021.8.0  tbb/latest
    compiler32/latest       dpct/latest              intel_ippcp_intel64/latest    tbb32/2021.10.0
    dal/2023.2.0            dpl/2022.2.0             itac/2021.10.0                tbb32/latest
    dal/latest              dpl/latest               itac/latest                   vtune/2023.2.0
    debugger/2023.2.0       icc/2023.2.1             mkl/2023.2.0                  vtune/latest
    debugger/latest         icc/latest               mkl/latest

    Key: loaded modulepath

Please also note that, by default, Intel MPI's mpicc uses GCC. To make Intel MPI use an Intel compiler, you have to set I_MPI_CC in your environment (or use mpiicc), e.g.:

    module load intel/oneapi/2023.2.0
    module load compiler/2023.2.1
    module load mpi/2021.10.0
    export I_MPI_CC=icx

Compiling Software

You can compile your software on the login nodes (or on any other node, inside a job allocation). Several compiler suites are available. While GCC version 11.X.Y is the built-in OS default, you can list additional compilers and libraries by running module avail:

- Intel compilers

- MPI libraries

- other libraries

For the right compilation commands please consider:

To build and manage software which is not available via “module avail” and is not available as a built-in OS package, we recommend using Spack. Please read this small introduction on how to use Spack on the cluster. More information is available on the Spack webpage.

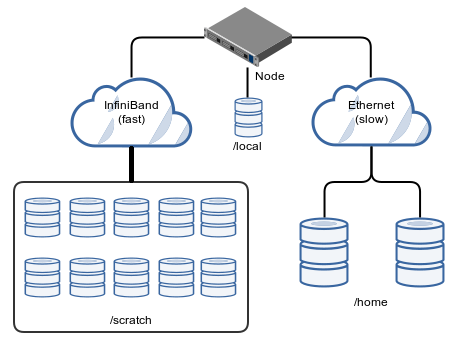

Storage

There are various storage systems available on the cluster. In this section we describe the most relevant:

- your home directory /home/fuchs/<group>/<user> (NFS, slow),

- your scratch directory /scratch/fuchs/<group>/<user> (parallel file system BeeGFS, fast),

- the non-shared local storage (i.e. unique on each compute node) under /local/$SLURM_JOB_ID on each compute node (max. 1.4 TB per node, slow)

Please use your home directory for small permanent files, e.g. source files, libraries and executables.

By default, the space in your home directory is limited to 30 GB, and in your scratch directory to 5 TB and/or 800000 inodes (which corresponds to approximately 200000+ files). You can check your homedir and scratch usage by running the quota command on a login node.
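The quota command reports totals only. To find out which directories hold the most files, a sketch like the following can help; it uses standard tools, and the function name count_entries is hypothetical. Each file or directory occupies roughly one inode.

```shell
# Hypothetical helper: list the immediate subdirectories of a directory
# together with the number of entries (files and directories) found
# below each of them, largest first.
count_entries() {
    for d in "$1"/*/; do
        [ -d "$d" ] || continue
        printf '%s\t%s\n' "$(find "$d" | wc -l)" "$d"
    done | sort -rn
}
```

A call such as count_entries /scratch/fuchs/<group>/<user> then shows where most of the inodes are used.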

If you need local storage on the compute nodes, you have to add the --tmp parameter to your job script (see SLURM section below). Set the amount of storage in megabytes, e.g. set --tmp=5000 to allocate 5 GB of local disk space. The local directory (/local/$SLURM_JOB_ID) is deleted after the corresponding job has finished. If, for some reason, you don't want the data to be deleted (e.g. for debugging), you can use salloc instead of sbatch and work interactively (see man salloc). Or, one can put an rsync at the end of the job script, in order to save the local data to /scratch just before the job exits:

    ...
    mkdir /scratch/fuchs/<groupid>/<userid>/$SLURM_JOBID
    scontrol show hostnames $SLURM_JOB_NODELIST | xargs -i ssh {} \
        rsync -a /local/$SLURM_JOBID/ \
        /scratch/fuchs/<groupid>/<userid>/$SLURM_JOBID/{}

Although our storage systems are protected by RAID mechanisms, we can't guarantee the safety of your data. It is the user's responsibility to back up important files.

Running Jobs With SLURM

On our systems, compute jobs and resources are managed by SLURM (Simple Linux Utility for Resource Management). The compute nodes are organized in the partition (or queue) named fuchs.

| Partition | Node type |
|---|---|
| fuchs | Intel Ivy Bridge CPU |

Nodes are used exclusively, i.e. only whole nodes are allocated for a job and no other job can use the same nodes concurrently.

In this document we discuss several job types and use cases. In most cases, a compute job falls under one (or more than one) of the following categories:

- Bundling Single-Threaded Tasks

- Job Arrays

- OpenMP Jobs

- MPI Jobs

- Hybrid Jobs: MPI/OpenMP

For every compute job you have to submit a job script (unless working interactively using salloc or srun, see man page for more information). If jobscript.sh is such a script, then a job can be enqueued by running

sbatch jobscript.sh

on a login node. A SLURM job script is a shell script containing SLURM directives (options), i.e. pseudo-comment lines starting with

#SBATCH ...

The SLURM options define the resources to be allocated for the job (and some other properties). Otherwise the script contains the “job logic”, i.e. commands to be executed.

Read More

The following instructions shall provide you with the basic information you need to get started with SLURM on our systems. However, the official SLURM documentation covers some more use cases (also in more detail). Please read the SLURM man pages (e.g. man sbatch or man salloc) and/or visit http://www.schedmd.com/slurmdocs. It's highly recommended.

Helpful SLURM link: SLURM FAQ

Your First Job Script

In fuchs you can allocate up to 120 nodes with two Intel Ivy Bridge CPUs, where each node has 20 cores (or 40 HT threads). In the following example we allocate 60 CPU cores (i.e. three nodes) and 512 MB per core for 5 minutes (SLURM may kill the job after that time, if it's still running):

    #!/bin/bash
    #SBATCH --job-name=foo
    #SBATCH --partition=fuchs
    #SBATCH --nodes=3
    #SBATCH --ntasks=60
    #SBATCH --ntasks-per-node=20
    #SBATCH --cpus-per-task=1
    #SBATCH --mem-per-cpu=512
    #SBATCH --time=00:05:00
    #SBATCH --no-requeue
    #SBATCH --mail-type=FAIL

    srun hostname
    sleep 3

| Option | Description |
|---|---|
| --cpus-per-task=1 | For SLURM, a CPU core (a CPU thread, to be more precise) is a CPU. |
| --no-requeue | Prevent the job from being requeued after a failure. |
| --mail-type=FAIL | Send an e-mail if something goes wrong. |

The srun command is responsible for the distribution of the program (hostname in our case) across the allocated resources, so that 20 instances of hostname will run on each of the allocated nodes concurrently. Please note that this is not the only way to run or to distribute your processes. Other cases and methods are covered later in this document. In contrast, the sleep command is executed only on the head node.

Although nodes are allocated exclusively, you should always specify a memory value that reflects the RAM requirements of your job. The scheduler treats RAM as a consumable resource. As a consequence, if you omit the --nodes parameter (so that only the number of CPU cores is defined) and allocate more memory per core than there actually is on a node, you'll automatically get more nodes if the job doesn't fit in otherwise. Moreover, jobs are killed through SLURM's memory enforcement when using more memory than requested.

After saving the above job script as e.g. jobscript.sh, you can submit your job by running

sbatch jobscript.sh

on the command line. The job's output streams (stdout and stderr) will be joined and saved to slurm-ID.out, where ID is a SLURM job ID, which is assigned automatically. You can change this behavior by adding an --output and/or --error argument to the SLURM options.
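As a sketch of the --output and --error options, the following job script writes the two streams to separate files. The file names are hypothetical; %j is the SLURM filename pattern that is replaced by the job ID.

```shell
#!/bin/bash
#SBATCH --job-name=foo
#SBATCH --partition=fuchs
#SBATCH --nodes=1
#SBATCH --time=00:05:00
# Write stdout and stderr to separate files; %j expands to the job ID.
#SBATCH --output=foo-%j.out
#SBATCH --error=foo-%j.err

echo "this line goes to the --output file"
echo "this line goes to the --error file" >&2
```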

Job Monitoring

For job monitoring (to check the current state of your jobs) you can use the squeue command. Depending on the current cluster utilization (and other factors), your job(s) may take a while to start. You can list the current queuing times by running sqtimes on the command line.

If you need to cancel a job, you can use the scancel command (please see the manpage, man scancel, for further details).
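The monitoring and cancelling commands can be wrapped in a few convenience functions, for example in your shell startup file. The function names are hypothetical; the squeue and scancel options used here are standard SLURM options.

```shell
# List only your own jobs (pending and running).
my_jobs() { squeue -u "$USER"; }

# Show the scheduler's estimated start times for your pending jobs.
my_start_times() { squeue -u "$USER" --start; }

# Cancel a single job by its job ID.
cancel_job() { scancel "$1"; }

# Cancel all of your jobs that have not started yet.
cancel_pending() { scancel -u "$USER" --state=PENDING; }
```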

Goethe-HLR users

All users with an account at both Goethe-HLR and FUCHS have to add

    #SBATCH --account=<your FUCHS group>
    #SBATCH --partition=fuchs

to the job script when they want to use the FUCHS cluster.

Node Types

On FUCHS only one type of compute node is available:

| Number | Type | Vendor | Processor | Processors × Cores (HT threads) | RAM [GB] |
|---|---|---|---|---|---|
| 194 | dual-socket | Intel | Xeon Ivy Bridge E5-2670 v2 | 2×10 (2×20) | 128 |

Per-User Resource Limits

On FUCHS, you have the following limits for the partition fuchs:

| Limit | Value | Description |
|---|---|---|
| MaxTime | 21 days | the maximum run time for jobs |
| MaxJobsPU | 10 | max. number of jobs a user is able to run simultaneously |
| MaxSubmitPU | 20 | max. number of jobs in running or pending state |
| MaxNodesPU | 50 | max. number of nodes a user is able to use at the same time |
| MaxArraySize | 1001 | the maximum job array size |

Hyper-Threading

On compute nodes you can use Hyper-Threading. That means, in addition to each physical CPU core a virtual core is available. SLURM identifies all physical and virtual cores of a node, so that you have 40 logical CPU cores on an Intel Ivy Bridge node. If you don't want to use HT, you can disable it by adding

| Node type | sbatch command |
|---|---|
| Ivy Bridge | #SBATCH --extra-node-info=2:10:1 |

to your job script. Then you'll get half the threads per node (which will correspond to the number of cores). This can be beneficial in some cases (some jobs may run faster and/or more stable).

Bundling Single-Threaded Tasks

Note: Please also see the Job Arrays section below.

Because only full nodes are given to you, you have to ensure that the available resources are used efficiently. Please combine as many single-threaded jobs as possible into one. The number of combined jobs is limited by the number of cores and the available memory. A simple job script to start 20 independent processes may look like this one:

    #!/bin/bash
    #SBATCH --partition=fuchs
    #SBATCH --nodes=1
    #SBATCH --ntasks=20
    #SBATCH --cpus-per-task=1
    #SBATCH --mem=100g
    #SBATCH --time=01:00:00
    #SBATCH --mail-type=FAIL
    #
    # Replace by a for loop.
    ./program input01 &> 01.out &
    ./program input02 &> 02.out &
    ...
    ./program input20 &> 20.out &
    # Wait for all child processes to terminate.
    wait

In this (SIMD) example we assume that there is a program (called program) which is run 20 times on 20 different inputs (usually input files). Both output streams (stdout and stderr) of each process are redirected to a file N.out. A job script is always executed on the first allocated node, and since exactly one node is allocated, we don't need to use srun. Further, we assume that the executable is located in the directory from which the job was submitted (the initial working directory).
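The 20 explicit command lines can be replaced by a for loop, as the comment in the script suggests. The following is a minimal sketch; the shell function program is only a stand-in so that the snippet is self-contained, and in a real job script you would call ./program instead.

```shell
# Stand-in for the real executable (assumption for illustration only).
program() { echo "processing $1"; }

# Start 20 background processes, one per input (input01 ... input20).
# seq -w pads the numbers with leading zeroes to equal width.
for i in $(seq -w 1 20); do
    program "input$i" > "$i.out" 2>&1 &
done

# Wait for all child processes to terminate.
wait
```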

Job Arrays

If you have lots of single-core computations to run, job arrays are worth a look. Telling SLURM to run a job script as a job array will result in running that script multiple times (after the corresponding resources have been allocated). Each instance will have a distinct SLURM_ARRAY_TASK_ID variable defined in its environment.

Due to our full-node policy, you still have to ensure that your jobs don't waste any resources. Let's say you have 400 single-core tasks. In the following example 400 tasks are run inside a job array while ensuring that only 20-core nodes are used and that each node runs exactly 20 tasks in parallel.

    #!/bin/bash
    #SBATCH --partition=fuchs
    #SBATCH --nodes=1
    #SBATCH --ntasks=20
    #SBATCH --cpus-per-task=1
    #SBATCH --mem-per-cpu=2000
    #SBATCH --time=00:10:00
    #SBATCH --array=0-399:20
    #SBATCH --mail-type=FAIL

    my_task() {
        # Print the given "global task number" with leading zeroes
        # followed by the hostname of the executing node.
        K=$(printf "%03d" $1)
        echo "$K: $HOSTNAME"
        # Do nothing, just sleep for 3 seconds.
        sleep 3
    }

    #
    # Every 20-task block will run on a separate node.
    for I in $(seq 20); do
        # This is the "global task number". Since we have an array of
        # 400 tasks, J will range from 1 to 400.
        J=$(($SLURM_ARRAY_TASK_ID+$I))
        # Put each task into background, so that tasks are executed
        # concurrently.
        my_task $J &
        # Wait a little before starting the next one.
        sleep 1
    done

    # Wait for all child processes to terminate.
    wait
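In practice, the global task number usually selects an input. The following sketch shows one way to do this, assuming a hypothetical file inputs.txt with one input name per line. SLURM_ARRAY_TASK_ID defaults to 0 here so that the snippet can also be tried outside of a job.

```shell
# Hypothetical input list: one input name per line (400 lines).
for n in $(seq 400); do printf 'input%03d\n' "$n"; done > inputs.txt

# Inside the job array, SLURM sets SLURM_ARRAY_TASK_ID to 0, 20, 40, ...
BASE=${SLURM_ARRAY_TASK_ID:-0}

for I in $(seq 20); do
    J=$((BASE + I))
    # Pick line J of the list as the input for this task.
    INPUT=$(sed -n "${J}p" inputs.txt)
    # Placeholder for the real work on $INPUT, run in the background.
    echo "task $J -> $INPUT" &
done

# Wait for all child processes to terminate.
wait
```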

If the task running times vary a lot, consider using the thread pool pattern. Have a look at GNU parallel, for instance.
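As a minimal sketch of the thread pool pattern, xargs with the -P option (available in GNU and BSD xargs) can stand in for GNU parallel: it keeps a fixed number of workers busy and starts the next task as soon as one finishes. The task command and the log file name are placeholders.

```shell
# Process 400 tasks with a pool of at most 20 concurrent workers.
# Each worker receives one task number as $1; replace the echo with
# the real command.
seq 1 400 | xargs -P 20 -n 1 sh -c 'echo "task $1 done"' _ > pool.log

# xargs returns only after all workers have finished.
wc -l < pool.log
```

Unlike the fixed blocks of 20 tasks in the job array example, a slow task here delays only its own worker, not the whole block.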

OpenMP Jobs

For OpenMP jobs, set the --cpus-per-task parameter. You could specify a --mem-per-cpu value. But in this case you have to divide the total RAM required by your program by the number of threads. E.g. if your application needs 4000 MB and you want to run 20 threads, then you have to set --mem-per-cpu=200 (4000/20 = 200). However, it's also possible to specify the total amount of RAM using the --mem parameter. Don't forget to set the OMP_NUM_THREADS environment variable. Example:

    #!/bin/bash
    #SBATCH --partition=fuchs
    #SBATCH --nodes=1
    #SBATCH --ntasks=1
    #SBATCH --cpus-per-task=20
    #SBATCH --mem=4000
    #SBATCH --mail-type=ALL
    #SBATCH --time=48:00:00

    export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK
    ./your_omp_program
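If you prefer --mem-per-cpu over --mem, the division described above can be sketched in shell arithmetic. The variable names are illustrative; rounding up is a design choice so that the per-CPU value never adds up to less than the total requirement.

```shell
# Values from the text: 4000 MB in total, shared by 20 threads.
TOTAL_MB=4000
THREADS=20

# Integer division, rounded up.
MEM_PER_CPU=$(( (TOTAL_MB + THREADS - 1) / THREADS ))

echo "--mem-per-cpu=$MEM_PER_CPU"
```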

MPI Jobs

Remember: Nodes are used exclusively. Each node has many CPU cores. If you want to run small jobs (i.e. where more than one job could be run on a single node concurrently), consider running more than one computation within a job. Otherwise it will most likely result in a waste of resources and will lead to a longer queueing time (for you and others).

See also: http://www.schedmd.com/slurmdocs/faq.html#steps

As an example, we want to run a program that spawns 80 MPI ranks and where 1200 MB of RAM are allocated for each rank.

    #!/bin/bash
    #SBATCH --partition=fuchs
    #SBATCH --nodes=4
    #SBATCH --ntasks=80
    #SBATCH --ntasks-per-node=20
    #SBATCH --cpus-per-task=1
    #SBATCH --mem-per-cpu=1200
    #SBATCH --mail-type=ALL
    #SBATCH --extra-node-info=2:10:1 # Don't use this with Intel MPI.
    #SBATCH --time=48:00:00

    module load mpi/.../<version>
    mpirun ./your_mpi_program

Note: You may get an error message if --mem-per-cpu is used for a job allocation and --mem-per-cpu times the number of CPUs on a node is greater than the total memory of that node. Please see Memory Allocation.

Some MPI installations support the srun command (instead of or in addition to mpirun), e.g.:

    [...]
    module load mpi/.../<version>
    srun --mpi=pmix ./your_mpi_program

MPI implementations are typically designed to work seamlessly with job schedulers like Slurm. When you launch MPI tasks with mpirun (or srun) inside your job script, the MPI library uses the information provided by Slurm (via environment variables or other means) to determine the communication topology and allocate processes accordingly.

Hybrid Jobs: MPI/OpenMP

MPI example script (20 ranks, 5 threads each and 200 MB per thread, i.e. 1 GB per rank; so, for 20*5 threads, you'll get five 20-core nodes):

    #!/bin/bash
    #SBATCH --partition=fuchs
    #SBATCH --ntasks=20
    #SBATCH --cpus-per-task=5
    #SBATCH --mem-per-cpu=200
    #SBATCH --mail-type=ALL
    #SBATCH --extra-node-info=2:10:1 # Don't use this with Intel MPI.
    #SBATCH --time=48:00:00

    module load mpi/.../<version>
    export OMP_NUM_THREADS=5
    # When using MVAPICH2 disable core affinity.
    export MV2_ENABLE_AFFINITY=0
    mpirun -np 20 ./example_program

Please note that this is just an example. You may or may not be able to run it as is with your software, which is likely to scale differently.

You have to disable the core affinity when running hybrid jobs with MVAPICH2. Otherwise all threads of an MPI rank will be pinned to the same core. Our example now includes the command

export MV2_ENABLE_AFFINITY=0

which disables this feature. The OS scheduler is now responsible for the placement of the threads during the runtime of the program. But the OS scheduler can dynamically change the thread placement during the runtime of the program. This leads to cache invalidation, which degrades performance. This can be prevented by thread pinning (topic not covered here).

When using Intel MPI, please also check its pinning parameters.

Memory Allocation

Normally the memory available per CPU thread is the total amount of RAM divided by the number of threads, for instance 128 GB / 40 threads = 3.2 GB per thread. Keep in mind that the FUCHS cluster provides two threads per core. Now imagine you need more memory, let's say 8192 MB per task. Type scontrol show node=<node> and look for 'mem'. Another way is to log in to an Ivy Bridge node and type free -m. In both cases you will find 128768 MB of memory. Now we calculate how many processes we can launch on one node: 128768 MB / 8192 MB = 15.72…, i.e. 15 processes after rounding down. With this result we can determine how many nodes we need. In the following example we want 69 processes with 8192 MB each.

    #!/bin/bash
    #SBATCH --job-name=<your_job_name>
    #SBATCH --partition=fuchs
    #SBATCH --ntasks=69           # Whole amount of processes we have.
    #SBATCH --cpus-per-task=1     # Only one task per CPU.
    # #SBATCH --mem-per-cpu=8192  # We can't use this argument here, because it ends up with an error
    #                             # message, therefore it's commented out.
    #SBATCH --mem=0               # Use this argument instead, which means "full memory of the node".
    #SBATCH --ntasks-per-node=15  # We have calculated and rounded that we need 15 per node.

    srun hostname

If everything works fine, you will be granted 5 nodes, for example 4 nodes with 14 tasks each and 1 node with 13 tasks, i.e. 56 tasks + 13 tasks = 69 tasks, as requested.
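The calculation from this section can be sketched in shell arithmetic. The variable names are illustrative; the numbers are the ones used in the example above.

```shell
NODE_MEM_MB=128768   # memory of one node as reported by scontrol / free -m
TASK_MEM_MB=8192     # memory required per task
NTASKS=69            # total number of tasks

# Tasks that fit on one node (integer division rounds down).
TASKS_PER_NODE=$(( NODE_MEM_MB / TASK_MEM_MB ))

# Number of nodes needed (rounded up).
NODES=$(( (NTASKS + TASKS_PER_NODE - 1) / TASKS_PER_NODE ))

echo "--ntasks-per-node=$TASKS_PER_NODE, nodes needed: $NODES"
```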

Local Storage

On each node there is up to 1.4 TB of local disk space (see also Storage). If you need local storage, you have to add the --tmp parameter to your SLURM script. Set the amount of storage in megabytes, e.g. set --tmp=5000 to allocate 5 GB of local disk space. The data in the local directory (/local/$SLURM_JOB_ID) is deleted after the corresponding batch job has finished.
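A single-node job that uses the local disk could look like the following sketch. The workload and the file names are placeholders, and the mktemp fallback is an assumption added only so that the script can be tried outside of SLURM. SLURM_SUBMIT_DIR is set by SLURM to the directory the job was submitted from.

```shell
#!/bin/bash
#SBATCH --partition=fuchs
#SBATCH --nodes=1
#SBATCH --time=00:10:00
#SBATCH --tmp=5000

# Inside a job, use the node-local directory; otherwise fall back to a
# temporary directory (for testing only).
if [ -n "${SLURM_JOB_ID:-}" ] && [ -d "/local/$SLURM_JOB_ID" ]; then
    WORKDIR="/local/$SLURM_JOB_ID"
else
    WORKDIR=$(mktemp -d)
fi
OUTDIR="${SLURM_SUBMIT_DIR:-$PWD}"

# Placeholder workload: write intermediate data to the local disk.
seq 1 1000 > "$WORKDIR/intermediate.dat"
wc -l < "$WORKDIR/intermediate.dat" > "$WORKDIR/result.txt"

# Copy only the final result back before the local directory is deleted.
cp "$WORKDIR/result.txt" "$OUTDIR/result.txt"
```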

The salloc Command

For interactive workflows you can use SLURM's salloc command. With salloc almost the same options can be used as with sbatch, e.g.:

    [user@fuchs ~]$ salloc --nodes=4 --time=0:45:00 --mem=64g --partition=fuchs
    salloc: Granted job allocation 1122971
    salloc: Waiting for resource configuration
    salloc: Nodes node27-[012-015] are ready for job
    [user@fuchs ~]$

Now you can ssh into the nodes that were allocated for the job and run further commands, e.g.:

    [user@fuchs ~]$ ssh node27-012
    [user@node27-012 ~]$ hostname
    node27-012
    [user@node27-012 ~]$ exit
    logout
    Connection to node27-012 closed.

Or you can use srun for running a command on all allocated nodes in parallel:

    [user@fuchs ~]$ srun hostname
    node27-013
    node27-012
    node27-015
    node27-014

Finally you can terminate your interactive job session by running exit, which will free the allocated nodes:

    [user@fuchs ~]$ exit
    exit
    salloc: Relinquishing job allocation 1122971